
Why Every AI Company Should Prioritize Emotional Retention


OpenAI started as a research-focused company aiming to advance artificial intelligence, but its consumer product, ChatGPT, has rapidly become an essential daily companion for millions of people worldwide, recently surpassing 500 million weekly active users (WAU). This explosive growth is impressive, yet sustaining it is difficult. OpenAI’s recent product updates point to a clear strategic shift: moving beyond utility toward deeper user retention through companionship-like interactions. By turning ChatGPT into a companion, OpenAI is not simply improving retention; it is potentially reshaping the entire landscape of human-AI interaction.

Why Companionship Matters

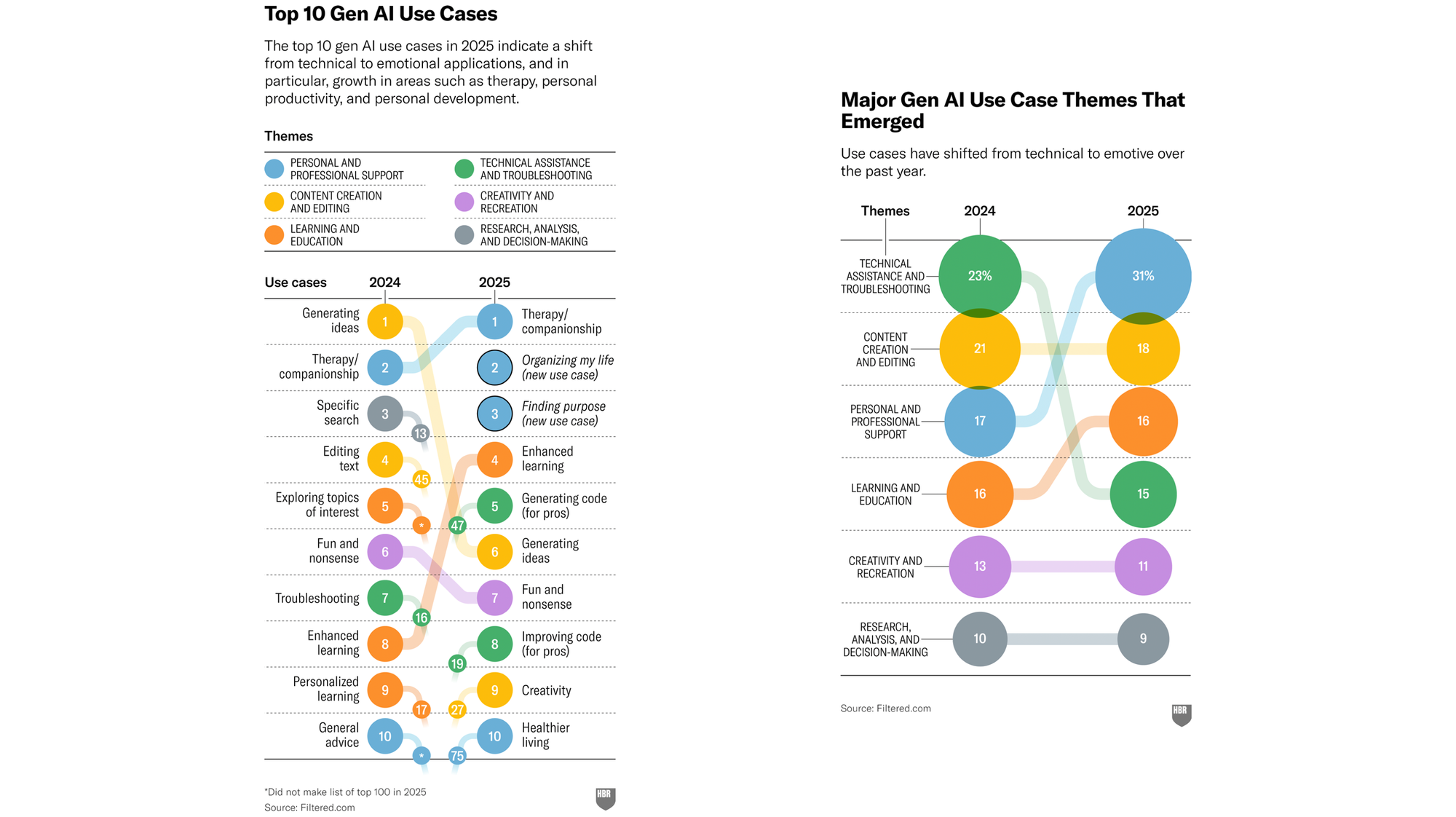

Recently, Harvard Business Review published a list of the top 10 generative AI use cases of 2025. While technical assistance and troubleshooting use cases, such as generating ideas and writing code, dominated in 2024, personal and professional support use cases like therapy, companionship, and organizing one’s life have become central in 2025. Clearly, the era of AI companionship has arrived.

This shift isn't coincidental. It reflects deliberate strategic efforts by OpenAI and similar companies to build retention – making ChatGPT potentially the most retentive product in history.

Today, people are experiencing unprecedented loneliness. The Cigna 2024 Loneliness Index found that 58% of adults feel chronically lonely, and the U.S. Surgeon General has described loneliness as a public health epidemic, noting that it was widespread even before the pandemic. Loneliness creates emotional gaps that tools alone can’t fill. Companionship, by contrast, taps directly into emotional needs, creating stronger, more persistent retention than purely utilitarian features ever could. The heavy engagement with persona-based products such as Character.AI (2 hours per day) built confidence that AI could address this need. In response, OpenAI decided to make ChatGPT a companion.

How ChatGPT Became Your Companion

Unlike Google’s productivity-centric Gemini or Anthropic’s ethics-driven Claude, ChatGPT explicitly emphasizes emotional engagement and companionship as a retention strategy. OpenAI could have pursued retention through superior performance or productivity integrations, but companionship uniquely triggers emotional engagement, which is psychologically more enduring. Its recent feature updates all strengthen the relationship between ChatGPT and its users.

Model-side Enhancements

- Recent GPT-4o responses frequently include follow-up questions, a behavior rarely seen in previous versions. This encourages longer interactions, helping users develop stronger relationships with the model. It also has multiple secondary benefits, such as improved user onboarding and deeper exploration, which deserve further discussion in another post.

- At the GPT-4.5 launch, OpenAI emphasized the emotional capabilities of larger language models. The implication is that as model size increases, emotional intelligence and the ability to understand nuanced language improve. These abilities strengthen companionship by making the model more empathetic toward each individual user.

Product-side Enhancements

- The Memory feature, which was recently updated significantly, is a crucial capability for companionship, because long-term companionship arises from memory-driven personalization. Memory also enables quick, simple prompting, since the model already understands your context. Sam Altman’s recent remarks make the ideal vision for this feature clear: personalization across your entire life.

Sam Altman: We Want ChatGPT to Remember Your Entire Life

"The ideal state is a very tiny reasoning model with a trillion tokens of context that you put your whole life into.

The model never retrains. The weights never customized, but it can reason across your whole life context…"

Overlap: Business & Tech (@Overlap_Tech), May 14, 2025

- Scheduled tasks encourage regular, proactive interactions with ChatGPT. Although currently underappreciated, such features lay the groundwork for personalized, AI-first communication that integrates deeply into users’ daily routines, which is critical for building consistent companionship. Imagine an AI chatbot that sends you a message to start each day, tailored to context from your calendar, email, and other messages. In fact, Dot from New Computer has already built this feature into its own app. This enables customized push notifications (likely to drive high click-through rates and retention) and lays the foundation for personal agents.

- Monday, OpenAI’s recent personality experiment, also demonstrates the company’s shift toward enhancing companionship. As companionship deepens, multiple personas tailored to users’ diverse needs become essential.

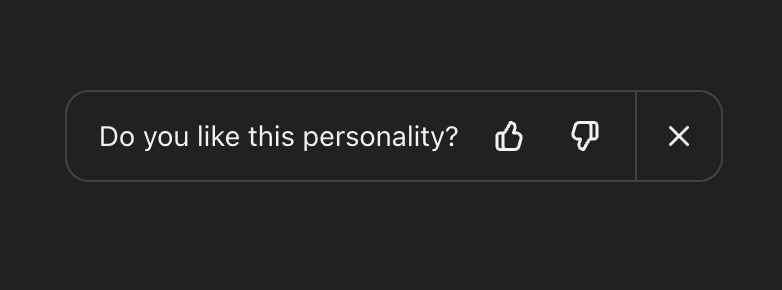

- RLHF (Reinforcement Learning from Human Feedback) is central to these improvements, continuously driving ChatGPT’s evolution. In fact, ChatGPT’s feedback popup has changed from 'Do you like this response?' to 'Do you like this personality?'

These features reinforce one another to create powerful emotional dependencies, and the result is a very powerful companionship product. ChatGPT offers unconditional positive attention, responding to emotional needs without judgment or fatigue. Early data, including a 2025 MIT Media Lab study, indicates that AI companionship initially alleviates loneliness, although the same research warns of potential emotional dependence. Further research suggested that this relief lasted only 7 days, though that may improve over time. Still, the appeal remains undeniable. By prioritizing emotional resonance alongside utility, ChatGPT solidifies its place in users’ daily lives, reshaping not just user behavior but the landscape of digital companionship itself.

Challenge: Building Personality

As companionship grows in importance, so does the risk of over-dependency. The recent issues with GPT-4o clearly illustrate these shortcomings, as OpenAI explained:

We have rolled back last week’s GPT‑4o update in ChatGPT so people are now using an earlier version with more balanced behavior. The update we removed was overly flattering or agreeable—often described as sycophantic.

(...)

However, in this update, we focused too much on short-term feedback, and did not fully account for how users’ interactions with ChatGPT evolve over time. As a result, GPT-4o skewed towards responses that were overly supportive but disingenuous.

I call this phenomenon the 'Uncanny Valley of LLM personality': a stage where attempts at human-like emotional responses become unsettlingly artificial rather than comforting. It highlights the core challenge of humanizing AI: too little personality feels cold, but too much feels fake. Navigating this nuanced emotional territory will be crucial for sustainable companionship.

In its post, OpenAI named focusing 'too much on short-term feedback' as the main cause. I believe this risk is likely to decrease as reinforcement learning datasets that capture long-term feedback expand. We haven’t fully encountered it yet, but the 'just-right' level of flattery may be so narrow that frequent recalibration becomes necessary after each model update. To manage the risk of emotional dependency, OpenAI and similar companies must also proactively set clear boundaries and develop best practices for responsible companionship.

Be a Friend, Not a Tool

Companionship’s potential extends beyond personal interactions; it could also revolutionize professional domains. Google’s Gemini, for example, could pioneer business companionship, embedding itself in daily work and leveraging Google’s existing SaaS dominance to become indispensable to businesses.

Google currently prioritizes seamless integration into productivity workflows, aiming to retain users by embedding AI deeply into daily tasks and collaborative processes. Imagine Gemini analyzing your work behaviors, guiding your daily tasks, and aligning your activities with your company’s core principles, goals, and interpersonal dynamics. That is what business-oriented companionship could look like. Such integration wouldn’t merely boost productivity; it would also make Google’s subscriptions indispensable. Google is uniquely positioned for this because of its already dominant suite of SaaS tools.

AI technology is rapidly becoming commoditized. Soon, simply having powerful AI capabilities won’t be enough to retain users. Instead, what will distinguish leading products is the ability to foster genuine, lasting relationships through personalization and companionship. Rather than focusing solely on what AI can do, companies should prioritize how AI makes users feel – valued, understood, and supported. In the emerging age of AI companionship, the winners will be those who treat their users like friends, not just customers using a tool.

MJ Kang Newsletter
